Introduction

Probability is the study of the properties of random events.

Preliminaries

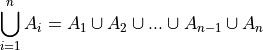

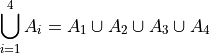

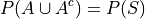

Compound Union

- Symbolic Expression

$$\bigcup_{i=1}^{n} A_i = A_1 \cup A_2 \cup \cdots \cup A_n$$

A symbol that represents the union of a sequence of sets.

- Example

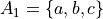

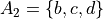

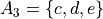

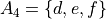

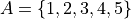

Let A, B, C and D be sets given by,

Then,

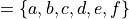
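The compound union maps directly onto Python sets. What follows is a minimal sketch using hypothetical sets. They are not the sets from the example above, whose definitions are not reproduced here. `compound_union` is an illustrative helper name. `set().union(*sets)` is the standard way to take the union of any number of sets.

```python
def compound_union(sets):
    """Return the union of every set in the sequence `sets`.

    Starting from the empty set means the union of an empty sequence
    is the empty set.
    """
    return set().union(*sets)


# Hypothetical sets, chosen only for illustration.
A = {1, 2}
B = {2, 3}
C = {4}
D = {1, 5}

print(sorted(compound_union([A, B, C, D])))  # [1, 2, 3, 4, 5]
```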

Summation

- Symbolic Expression

$$\sum_{i=1}^{n} x_i = x_1 + x_2 + \cdots + x_n$$

Sometimes written as,

$$\sum_{x \in B} x$$

where B is a set of elements.

A symbol that represents the sum of the elements $x_i$.

- Example

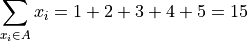

Let the set A be given by,

Then,

Note

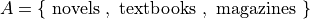

The sum $\sum_{x \in A} x$ is only defined if the set being summed contains only numerical elements. It makes no sense to talk about the sum of the elements of a set like,
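In Python, the built-in `sum` plays the role of $\sum_{x \in A} x$ for a set of numbers. The set below is hypothetical. Summing a set of strings raises a `TypeError`, which mirrors the point of the note: summation needs numerical elements.

```python
# Hypothetical set of numbers, used only for illustration.
A = {1, 2, 3, 4}
total = sum(A)  # 1 + 2 + 3 + 4
print(total)  # 10

# A set of non-numerical elements has no meaningful sum:
# sum starts from 0, and 0 + "a" is not defined.
letters = {"a", "b", "c"}
try:
    sum(letters)
except TypeError as err:
    print("cannot sum:", err)
```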

Definitions

- Experiment

A procedure whose result is uncertain.

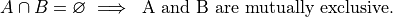

- Mutual Exclusivity

Two sets, A and B, are mutually exclusive if they are disjoint, i.e. $A \cap B = \emptyset$.

- Outcomes

(lower case letters)

A possible result of an experiment.

- Sample Space

The set of all possible outcomes for an experiment.

Note

The sample space is simply the Universal Set in probability’s Domain of Discourse.

- Events

(upper-case letters, or upper-case letters with subscripts)

A subset of the sample space, i.e. a set of outcomes.

- Probability

A numerical measure of the likelihood, or chance, that an event A occurs.

Sample Spaces and Events

The sample space for an experiment is the set of everything that could possibly happen.

Motivation

Note

By “fair”, we mean that all outcomes are equally likely.

Consider flipping a fair, two-sided coin. The only possible outcomes of this experiment are heads or tails. If we let h represent the outcome of a head for a single flip and t represent the outcome of a tail for a single flip, then the sample space is given by the set S,

$$S = \{h, t\}$$

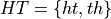

Events can be defined as subsets of the sample space. If we let H represent the event of a head and if we let T represent the event of a tail, then clearly,

$$H = \{h\} \quad \text{and} \quad T = \{t\}$$

Be careful not to confuse the outcome h with the event H, and likewise the outcome t with the event T. They have different, but related, meanings. The outcomes h and t are individual observables; they are physically measured by flipping a coin and observing on which side it lands. An event, on the other hand, is a set, and sets are abstract collections of individual elements. In this case, the sets are singletons, i.e. the sets H and T only contain one element each, which can lead to confusing the set for the outcome. Let us extend this example further, to put a finer point on this subtlety.

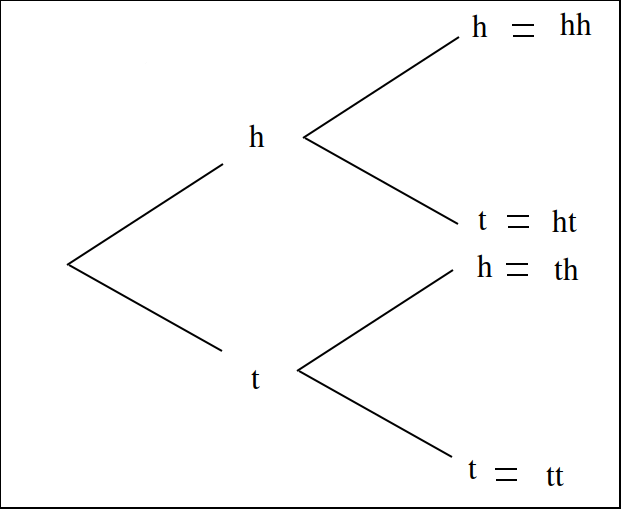

Consider now flipping the same fair, two-sided coin twice. A tree diagram can help visualize the sample space for this experiment. We represent each flip as a branch in the tree diagram, with each outcome forking the tree,

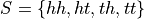

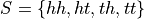

The outcomes of the sample space are found by tracing each possible path of the tree diagram and then collecting them into a set,

$$S = \{hh, ht, th, tt\}$$

In this example, unlike the previous one, there is no simple one-to-one correspondence between the events defined on the sample space and the outcomes within those events.

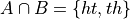

Take note, the sequence of outcomes ht is different from the sequence of outcomes th. In the first case, we get a head and then a tail. In the second case, we get a tail and then a head. Therefore, ht and th represent two different outcomes that correspond to the same event. Let us call that event the set HT. HT represents the event of getting one head and one tail, regardless of order. Then, HT has exactly two outcomes (elements),

$$HT = \{ht, th\}$$

When one of the outcomes ht or th is observed, we say the event HT occurs.
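The distinction between outcomes (elements) and events (sets) can be sketched in Python. The sketch assumes each outcome is encoded as a string such as `"ht"` (first flip, then second flip). The helper `occurs` is a hypothetical name for illustration. `itertools.product` enumerates every path through the tree diagram.

```python
from itertools import product

# Every path through the tree diagram: each outcome is a string such as "ht".
S = {"".join(flips) for flips in product("ht", repeat=2)}
print(sorted(S))  # ['hh', 'ht', 'th', 'tt']

# The event HT is a set: exactly one head and one tail, in either order.
HT = {outcome for outcome in S if outcome.count("h") == 1}
print(sorted(HT))  # ['ht', 'th']


def occurs(event, observed_outcome):
    """An event occurs when the observed outcome is one of its elements."""
    return observed_outcome in event


print(occurs(HT, "th"))  # True
print(occurs(HT, "hh"))  # False
```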

It is important to keep in mind the distinction between events and outcomes. The differences are summarized below,

Outcomes are elements. Events are sets.

Outcomes are observed. Events occur.

Compound Events

A compound event is formed by composing simpler events with set operations such as union, intersection, and complement.

- Example

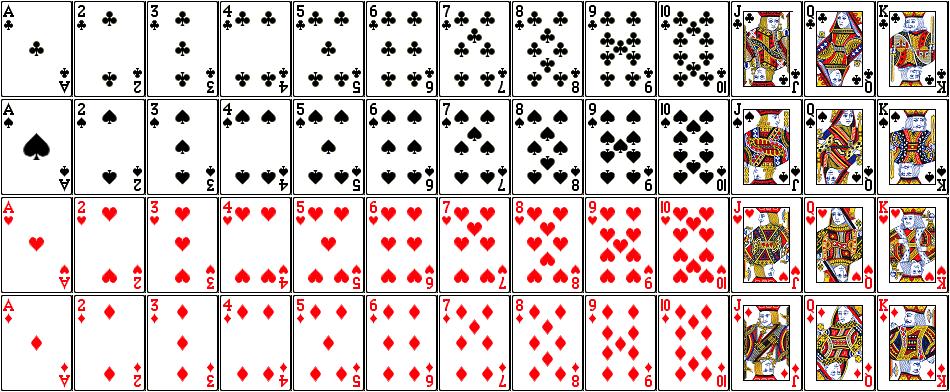

Consider the experiment of drawing a single card at random from a well-shuffled, standard playing deck. Let A represent the event of drawing a 2. Let B represent the event of drawing a heart.

The meaning of a few different compound events is considered below,

$$A \cap B$$

This compound event represents the event of getting the 2 of hearts.

$$A \cup B$$

This compound event represents the event of getting a 2 or a heart.

$$A^c$$

This compound event represents the event of getting any card except a 2.

$$A \cap B^c$$

This compound event represents the event of getting a 2 that is not a heart.
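These compound events can be checked by brute force. This minimal sketch assumes a hypothetical encoding of each card as a `(rank, suit)` tuple. Python's set operators `&`, `|`, and `-` stand in for intersection, union, and set difference (the complement is taken relative to S).

```python
from itertools import product

# Hypothetical encoding: each card is a (rank, suit) tuple.
ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["hearts", "diamonds", "clubs", "spades"]
S = set(product(ranks, suits))  # sample space: all 52 cards

A = {card for card in S if card[0] == "2"}        # drawing a 2
B = {card for card in S if card[1] == "hearts"}   # drawing a heart

two_of_hearts = A & B   # A ∩ B
two_or_heart = A | B    # A ∪ B
not_a_two = S - A       # A^c, the complement of A within S
two_not_heart = A - B   # A ∩ B^c, the same set as A & (S - B)

print(len(two_of_hearts), len(two_or_heart), len(not_a_two), len(two_not_heart))
# 1 16 48 3
```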

Classical Definition of Probability

Returning to the experiment of flipping a fair coin once, we have a sample space and two events, H and T, defined on that sample space,

$$S = \{h, t\}, \quad H = \{h\}, \quad T = \{t\}$$

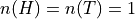

The cardinalities of these sets are given by,

$$|S| = 2, \quad |H| = 1, \quad |T| = 1$$

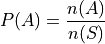

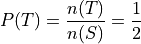

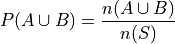

When all outcomes in the sample space are equally likely, a natural way to define the probability of an event is as the ratio of the cardinality of that event to the cardinality of the sample space. This leads to the following definition of the probability of event A,

$$P(A) = \frac{|A|}{|S|}$$

In plain English,

The probability of an event A is the ratio of the number of ways A can occur to the total number of outcomes in the sample space S.

Another way of saying the same thing,

The probability of an event A is the ratio of the cardinalities of the set A and the sample space S.

This is called the classical definition of probability.

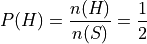

Applying this definition to the events H and T in the first example gives

$$P(H) = \frac{|H|}{|S|} = \frac{1}{2}, \quad P(T) = \frac{|T|}{|S|} = \frac{1}{2}$$

This conforms to the intuitive notion that equally likely events should have the same probability: if the coin being flipped is fair, the probabilities of H and T should be equal.
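The classical definition translates directly into code for a finite sample space. This sketch assumes that events are Python sets of equally likely outcomes. `classical_probability` is a hypothetical helper. `fractions.Fraction` keeps the ratio exact instead of rounding it to a float.

```python
from fractions import Fraction


def classical_probability(event, sample_space):
    """P(A) = |A| / |S|, assuming every outcome in S is equally likely."""
    if not event <= sample_space:
        raise ValueError("an event must be a subset of the sample space")
    return Fraction(len(event), len(sample_space))


S = {"h", "t"}
H = {"h"}
T = {"t"}
print(classical_probability(H, S), classical_probability(T, S))  # 1/2 1/2
```

Because `len(sample_space)` must be a finite number, this function has nothing to say about the spinning dial in the next section.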

Axioms of Probability

The classical definition of probability suffices for a general understanding of probability, but there are cases where it fails to account for every feature we would expect a definition of probability to satisfy.

To see this, consider the experiment of spinning a dial on a clock with radius r,

(INSERT PICTURE)

The dial can land at any point between 0 and the circumference of the clock, $2\pi r$. Between 0 and $2\pi r$, there are infinitely many numbers (0, 0.1, 0.01, 0.001, …, 1, 1.1, 1.01, 1.001, …, $2\pi r$). What is $P(A) = \frac{|A|}{|S|}$ when the sample space of outcomes is infinitely large? The classical definition of probability is unable to answer this question.

For this reason and other similar cases, the classical definition of probability is not sufficient to completely determine the nature of probability. This leads to the axiomatization of probability: a set of constraints that any model of probability must satisfy in order to be considered a probability.

Note

We will see in a subsequent section, when we discuss the uniform distribution, that while the classical definition cannot give the probability of the dial landing exactly on a given number, we can calculate the probability that the dial lands within a certain interval (that is to say, a certain arc length of the clock’s circumference).

Axioms

Axiom 1

All probabilities are non-negative; no probability can be negative. For any event A,

$$P(A) \geq 0$$

Axiom 2

The probability that some outcome from the sample space S occurs is equal to 1,

$$P(S) = 1$$

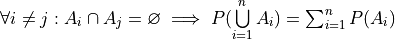

Axiom 3

If each event $A_i$ in the sample space S is mutually exclusive with every other event $A_j$, i.e. $A_i \cap A_j = \emptyset$ for all $i \neq j$, then the probability of the union of all of these events is equal to the sum of the probabilities of each individual event,

$$P\left(\bigcup_{i} A_i\right) = \sum_{i} P(A_i)$$

Axiom 1 and Axiom 2 are fairly intuitive and straightforward in their meaning, while Axiom 3 takes a bit of study to fully appreciate. To help in that endeavor, consider the following example.

- Example

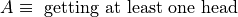

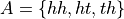

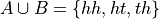

Let us return again to the experiment of flipping a fair coin twice. Consider now two different events A and B defined on this sample space,

Find the probability of $A \cup B$.

The sample space S of this experiment was given by,

$$S = \{hh, ht, th, tt\}$$

Then, in terms of outcomes, clearly, these events can be defined as,

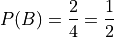

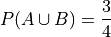

And, using the Classical Definition of Probability, the probabilities of these events can be calculated by,

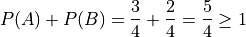

Axiom 3 tells us how to compute $P(A \cup B)$; it tells us the probability of the union is equal to the sum of the individual probabilities. However, if we try to apply Axiom 3 here, we wind up with a contradiction,

Here is a probability greater than 1, which cannot be the case. What is going on?

The issue is the condition that must be met to apply Axiom 3; the events A and B must be mutually exclusive, $A \cap B = \emptyset$, while in this example we have,

In other words, A and B are not mutually exclusive here. Therefore, we cannot say the probability of the union of these two events is equal to the sum of the probabilities of each individual event. In fact, in this example,

And therefore, by the Classical Definition of Probability,

Which is clearly not greater than 1.

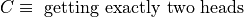

If, instead, we consider the event C,

Then, the outcomes of C are,

And the probability of the event C,

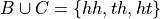

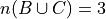

Then, the compound event $B \cup C$ is found by aggregating the outcomes in both of the individual events B and C into a single new set,

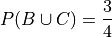

So the probability of the compound event $B \cup C$ is calculated as,

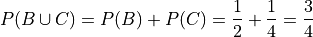

Notice $B \cap C = \emptyset$, i.e. B and C are mutually exclusive, so by Axiom 3, we may also decompose this probability into its individual probabilities,

In this case, the two methods of finding the probabilities agree because the condition (or hypothesis) of Axiom 3 was met, namely, that the events are mutually exclusive. If the condition (or hypothesis) of Axiom 3 is not met, then its conclusion does not follow.
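The role of Axiom 3's hypothesis can be checked numerically on the two-flip sample space. The events below are hypothetical examples, not the A, B, and C defined above. "At least one head" and "at least one tail" overlap, while "two heads" and "two tails" are mutually exclusive.

```python
from fractions import Fraction
from itertools import product

S = {"".join(flips) for flips in product("ht", repeat=2)}


def P(event):
    """Classical probability |E| / |S| on the two-flip sample space."""
    return Fraction(len(event), len(S))


# Hypothetical events, chosen only to illustrate Axiom 3's hypothesis.
at_least_one_head = {o for o in S if "h" in o}   # {hh, ht, th}
at_least_one_tail = {o for o in S if "t" in o}   # {ht, th, tt}
two_heads = {"hh"}
two_tails = {"tt"}

# Overlapping events: adding their probabilities overshoots past 1.
print(P(at_least_one_head) + P(at_least_one_tail))   # 3/2
print(P(at_least_one_head | at_least_one_tail))      # 1

# Mutually exclusive events: both calculations agree, as Axiom 3 promises.
print(two_heads & two_tails)                                  # set()
print(P(two_heads | two_tails), P(two_heads) + P(two_tails))  # 1/2 1/2
```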

Theorems

We can use these axioms, along with the theorems of set theory, to prove various things about probability.

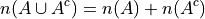

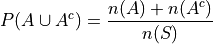

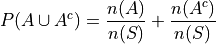

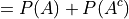

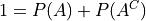

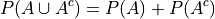

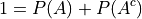

Law of Complements

- Symbolic Expression

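$$P(A) + P(A^c) = 1$$

This corollary should be intuitively obvious, considering the Venn Diagram of complementary sets,

If the entire rectangle encompassing set A in the above diagram is identified as the sample space S, then the theorem follows from Axiom 2, namely, $P(S) = 1$. The regions A and $A^c$ are mutually exclusive and together fill the whole rectangle, so by Axiom 3 their probabilities add up to $P(S) = 1$.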

Warning

The converse of this theorem is not true, i.e. if two events A and B have probabilities that sum to 1, this does not imply they are complements of one another.

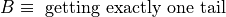

To see an example of what that pesky warning is talking about, consider flipping a fair, two-sided coin twice. Let A be the event of getting a head on the first flip. Let B be the event of getting exactly one head across both flips.

The outcomes of A are given by,

$$A = \{hh, ht\}$$

While the outcomes of B are given by,

$$B = \{ht, th\}$$

In this case,

$$P(A) + P(B) = \frac{2}{4} + \frac{2}{4} = 1$$

But A and B are not complements, since the outcome ht belongs to both of them. To restate this result in plain English,

The probabilities of complementary events sum to 1. The converse does not hold: if the probabilities of two events sum to 1, the events are not necessarily complements.
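The counterexample can be confirmed by enumerating the two-flip sample space. In the sketch below, `are_complements` is a hypothetical helper. It tests the set-theoretic definition (the two sets are disjoint and together equal S) rather than looking at probabilities.

```python
from fractions import Fraction
from itertools import product

S = {"".join(flips) for flips in product("ht", repeat=2)}


def P(event):
    """Classical probability |E| / |S| on the two-flip sample space."""
    return Fraction(len(event), len(S))


def are_complements(E, F, sample_space):
    """E and F are complements when they are disjoint and together cover S."""
    return not (E & F) and (E | F) == sample_space


A = {o for o in S if o[0] == "h"}         # head on the first flip
B = {o for o in S if o.count("h") == 1}   # exactly one head

print(P(A) + P(B))                # 1
print(are_complements(A, B, S))   # False: ht is in both A and B
print(are_complements(A, S - A, S), P(A) + P(S - A))  # True 1
```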

Two equivalent formal proofs of this theorem are given below. Choose whichever one makes more sense to you.

- Proof #1

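By the Classical Definition of Probability, the probability of $A \cup A^c$ is given by,

$$P(A \cup A^c) = \frac{|A \cup A^c|}{|S|}$$

By Counting Theorem 1, and since $A \cap A^c = \emptyset$,

$$|A \cup A^c| = |A| + |A^c| - |A \cap A^c| = |A| + |A^c|$$

So, the probability of $A \cup A^c$ is,

$$P(A \cup A^c) = \frac{|A| + |A^c|}{|S|}$$

Distributing $\frac{1}{|S|}$,

$$P(A \cup A^c) = \frac{|A|}{|S|} + \frac{|A^c|}{|S|}$$

Applying the Classical Definition of Probability to both terms on the right hand side of the equation,

$$P(A \cup A^c) = P(A) + P(A^c)$$

On the other hand, by Complement Theorem 2,

$$A \cup A^c = S$$

By Axiom 2,

$$P(A \cup A^c) = P(S) = 1$$

Putting it all together,

$$P(A) + P(A^c) = 1$$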

- Proof #2

By Complement Theorem 3,

$$A \cap A^c = \emptyset$$

Therefore, $A$ and $A^c$ are mutually exclusive. So by Axiom 3, we can say,

$$P(A \cup A^c) = P(A) + P(A^c)$$

But, by Complement Theorem 2,

$$A \cup A^c = S$$

And by Axiom 2,

$$P(S) = 1$$

So,

$$P(A) + P(A^c) = P(A \cup A^c) = P(S) = 1$$

- Example

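Find the probability of getting at least one head if you flip a fair coin three times.

Let A be the event of getting at least one head. Its complement $A^c$ is the event of getting no heads at all, which contains a single outcome, $A^c = \{ttt\}$. The sample space contains $2^3 = 8$ equally likely outcomes, so by the Law of Complements,

$$P(A) = 1 - P(A^c) = 1 - \frac{1}{8} = \frac{7}{8}$$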
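This example can be checked by brute force. The code enumerates all $2^3 = 8$ equally likely outcomes and computes the probability both directly and through the complement.

```python
from fractions import Fraction
from itertools import product

S = {"".join(flips) for flips in product("ht", repeat=3)}  # 8 outcomes
A = {o for o in S if "h" in o}   # at least one head
A_complement = S - A             # no heads at all: {"ttt"}

direct = Fraction(len(A), len(S))
via_complement = 1 - Fraction(len(A_complement), len(S))
print(direct, via_complement)  # 7/8 7/8
```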

Law of Unions

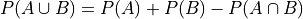

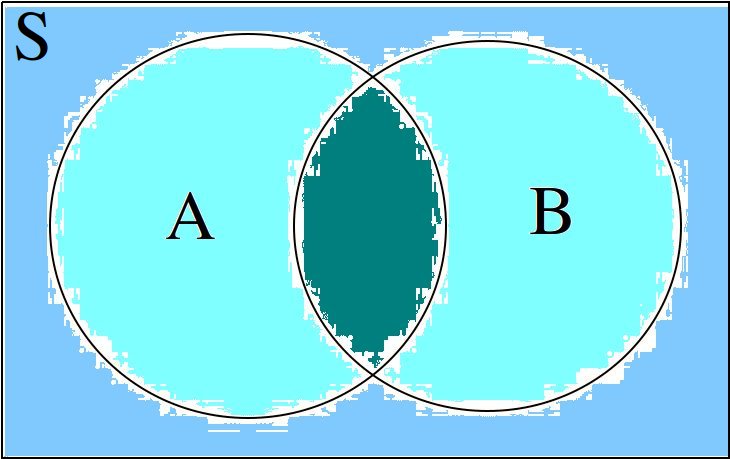

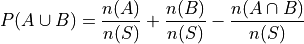

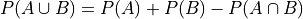

- Symbolic Expression

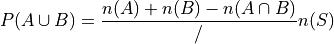

Again, from inspection of a Venn Diagram of overlapping sets, this theorem should be obvious,

The union is the area encompassed by both circles. When we add the probability of A (area of circle A) to the probability of B (area of circle B), we double-count the area $A \cap B$, so to correct the overcount, we must subtract the offending area once.

The formal proof of the Law of Unions follows directly from Counting Theorem 1 and the Classical Definition of Probability. The proof is given below.

- Proof

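By the Classical Definition of Probability,

$$P(A \cup B) = \frac{|A \cup B|}{|S|}$$

By Counting Theorem 1,

$$P(A \cup B) = \frac{|A| + |B| - |A \cap B|}{|S|}$$

Distributing $\frac{1}{|S|}$,

$$P(A \cup B) = \frac{|A|}{|S|} + \frac{|B|}{|S|} - \frac{|A \cap B|}{|S|}$$

Applying the Classical Definition of Probability to all three terms on the right side of the equation,

$$P(A \cup B) = P(A) + P(B) - P(A \cap B)$$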

- Example

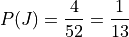

Consider a standard deck of 52 playing cards. Find the probability of selecting a Jack or a Diamond.

The sample space for selecting a single card from a deck of 52 cards is shown below,

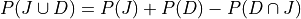

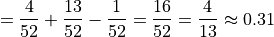

Let J be the event of selecting a Jack. Let D be the event of selecting a Diamond. This example asks us to find $P(J \cup D)$.

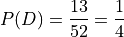

There are 4 Jacks and 13 Diamonds in a standard deck of cards. Therefore, the probabilities of the individual events are given by,

$$P(J) = \frac{4}{52}, \quad P(D) = \frac{13}{52}$$

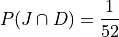

If we stopped at this point and simply added these two probabilities to find $P(J \cup D)$, we would be counting the Jack of Diamonds twice, once when we found the probability of a Jack and again when we found the probability of a Diamond. To avoid double-counting this card, we first find,

$$P(J \cap D) = \frac{1}{52}$$

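Therefore, the desired probability is,

$$P(J \cup D) = P(J) + P(D) - P(J \cap D) = \frac{4}{52} + \frac{13}{52} - \frac{1}{52} = \frac{16}{52} = \frac{4}{13}$$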
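A brute-force check of this example, assuming a hypothetical encoding of each card as a `(rank, suit)` tuple. The code counts the cards in $J \cup D$ directly and compares the result with the Law of Unions.

```python
from fractions import Fraction
from itertools import product

# Hypothetical encoding of a standard deck as (rank, suit) tuples.
ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["hearts", "diamonds", "clubs", "spades"]
S = set(product(ranks, suits))

J = {card for card in S if card[0] == "J"}          # a Jack
D = {card for card in S if card[1] == "diamonds"}   # a Diamond


def P(event):
    """Classical probability |E| / |S| for a single draw."""
    return Fraction(len(event), len(S))


law_of_unions = P(J) + P(D) - P(J & D)   # subtract the Jack of Diamonds once
print(law_of_unions, P(J | D))           # 4/13 4/13
```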

Probability Tables

If you have two events, $A$ and $B$, then you can form a two-way probability table by partitioning the sample space into $A$ and $A^c$ and then simultaneously partitioning the sample space into $B$ and $B^c$,

| Events | $B$ | $B^c$ | Probability |
|---|---|---|---|
| $A$ | $P(A \cap B)$ | $P(A \cap B^c)$ | $P(A)$ |
| $A^c$ | $P(A^c \cap B)$ | $P(A^c \cap B^c)$ | $P(A^c)$ |
| Probability | $P(B)$ | $P(B^c)$ | $1$ |
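The inner cells and margins of a two-way table can be computed from the outcomes themselves. This sketch assumes the card deck is encoded as `(rank, suit)` tuples. A is taken to be "drawing a Jack" and B "drawing a Diamond", which is an illustrative choice. `two_way_table` is a hypothetical helper.

```python
from fractions import Fraction
from itertools import product

# Hypothetical encoding of a standard 52-card deck as (rank, suit) tuples.
ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["hearts", "diamonds", "clubs", "spades"]
S = set(product(ranks, suits))

J = {card for card in S if card[0] == "J"}          # event A: a Jack
D = {card for card in S if card[1] == "diamonds"}   # event B: a Diamond


def two_way_table(A, B, S):
    """Return the cells of the two-way probability table for events A and B."""
    def P(event):
        return Fraction(len(event), len(S))

    rows = {"A": A, "A^c": S - A}
    cols = {"B": B, "B^c": S - B}
    table = {}
    for r_name, R in rows.items():
        for c_name, C in cols.items():
            table[r_name, c_name] = P(R & C)     # joint probabilities
        table[r_name, "Probability"] = P(R)      # row margin
    for c_name, C in cols.items():
        table["Probability", c_name] = P(C)      # column margin
    table["Probability", "Probability"] = P(S)   # grand total, always 1
    return table


table = two_way_table(J, D, S)
for row in ["A", "A^c", "Probability"]:
    print(row, [str(table[row, col]) for col in ["B", "B^c", "Probability"]])
# A ['1/52', '3/52', '1/13']
# A^c ['3/13', '9/13', '12/13']
# Probability ['1/4', '3/4', '1']
```

Each row's inner cells sum to its margin, and both sets of margins sum to 1. This gives a quick consistency check on a filled-in table.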